Cloud Database Insider

Databricks Acquires Redash🤝|Databricks Acquires Tecton💵|Data Mesh Empowers Agentic AI Systems🌥️|My Review of the GCP Data Engineer Exam/Certification

Databricks went shopping. That Series K funding might be helping.

What’s in today’s newsletter:

Databricks Acquires Redash to Boost Data Analytics🤝

Databricks Acquires Tecton for Real-Time AI Boost💵

Data Mesh Empowers Agentic AI Systems🌥️

Ataccama study finds poor data quality blocks AI 🚫

AI drives graph database growth for complex data insights 🧠

Also, check out the weekly Deep Dive: My Review of the GCP Data Engineer Exam/Certification, and Everything Else in Cloud Databases.

DATABRICKS

TL;DR: Databricks acquired Redash to integrate user-friendly visualization with advanced analytics, enhancing collaboration and accessibility and democratizing data insights across teams for improved decision-making.

Databricks acquired Redash to boost its data visualization and dashboarding capabilities within its analytics platform.

The integration combines Redash’s querying versatility with Databricks’ machine learning and data processing strengths.

The acquisition aims to enhance collaboration and data accessibility across teams and to speed up insight generation.

The acquisition supports democratized data analytics, making powerful tools accessible to diverse users from analysts to data scientists.

Why this matters: Databricks’ acquisition of Redash enhances its platform by integrating versatile visualization with strong data processing, enabling easier collaboration and broader access to insights. This empowers diverse users to leverage data more effectively, positioning Databricks as a leader in making advanced analytics more accessible and actionable.

TL;DR: Databricks is acquiring Tecton to enhance real-time ML deployment, integrating feature store tech to unify workflows, reduce latency, and strengthen scalable AI agent capabilities on its Lakehouse Platform.

Databricks plans to acquire Tecton to enhance real-time machine learning model deployment capabilities.

Tecton's feature store simplifies feature engineering and reduces latency in AI model inference workflows.

The acquisition supports Databricks’ Lakehouse Platform vision of unifying data engineering, science, and ML.

This move boosts Databricks’ competitive edge in AI by enabling scalable, production-ready real-time AI agents.

Why this matters: Databricks acquiring Tecton enhances real-time AI deployment, crucial for applications like fraud detection and personalization. This integration streamlines machine learning workflows, strengthens Databricks’ market position, and accelerates enterprise adoption of scalable, efficient AI solutions, driving broader advancements in operational AI at scale.
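The latency claim becomes clearer with a picture of the online half of a feature store. Features are updated per entity as events arrive, so inference only does a key lookup and never re-aggregates raw history. This is a minimal sketch of that general idea and not Tecton's API. The class, the feature names, and the fraud rule are all hypothetical.

```python
from collections import defaultdict


class OnlineFeatureStore:
    """Hypothetical in-memory online feature store (not Tecton's API).

    Aggregates are maintained incrementally at write time, so the serving
    path is a dictionary lookup instead of a scan over raw events.
    """

    def __init__(self) -> None:
        self._features: dict[str, dict[str, float]] = defaultdict(dict)

    def ingest_transaction(self, user_id: str, amount: float) -> None:
        # Write path: update running aggregates as each event arrives.
        f = self._features[user_id]
        f["txn_count"] = f.get("txn_count", 0) + 1
        f["txn_total"] = f.get("txn_total", 0.0) + amount
        f["max_amount"] = max(f.get("max_amount", 0.0), amount)

    def get_online_features(self, user_id: str) -> dict[str, float]:
        # Read path: constant-time lookup; unseen users get default values.
        f = self._features.get(user_id, {})
        count = f.get("txn_count", 0)
        return {
            "txn_count": count,
            "avg_amount": f.get("txn_total", 0.0) / count if count else 0.0,
            "max_amount": f.get("max_amount", 0.0),
        }


def looks_suspicious(store: OnlineFeatureStore, user_id: str,
                     amount: float, ratio: float = 5.0) -> bool:
    # Hypothetical real-time fraud rule: flag a payment far above the user's average.
    feats = store.get_online_features(user_id)
    return feats["txn_count"] > 0 and amount > ratio * feats["avg_amount"]
```

For example, after three transactions of 20, 30, and 25, the user's average is 25. A 500 payment is flagged and a 40 payment is not. A real feature store adds the offline/online consistency, backfills, and time-travel correctness that this sketch ignores.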

DATABASE ARCHITECTURE

TL;DR: The data mesh pattern decentralizes data ownership, enhancing agentic AI's autonomy with real-time, domain-specific data, improving decision-making, speeding AI development, and driving enterprise digital transformation globally.

The data mesh pattern decentralizes data architecture, supporting agentic AI's need for real-time, domain-specific information.

Treating data as a product with domain ownership ensures higher quality and relevance for autonomous AI decision-making.

Data mesh enables AI agents to operate more independently by providing trustworthy, up-to-date datasets for complex tasks.

This approach fosters faster AI development, democratizes data access, and accelerates enterprise digital transformation.

Why this matters: Decentralized data mesh architecture gives agentic AI real-time, high-quality domain data, enabling autonomous decisions and removing centralized bottlenecks. This fosters faster AI innovation, democratizes data access, and accelerates digital transformation, fundamentally reshaping enterprise workflows towards greater efficiency and agility.
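One way to picture "data as a product with domain ownership" is a catalog where each domain publishes its datasets with an accountable owner and a freshness guarantee. An agent then reads only the products that currently meet that guarantee. This is a minimal sketch under those assumptions. The class and field names are hypothetical and do not belong to any data mesh product or standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical descriptor a domain team publishes for one data product."""

    name: str
    domain: str               # owning business domain, e.g. "payments"
    owner: str                # accountable team
    last_updated: datetime
    max_staleness: timedelta  # freshness guarantee the domain commits to

    def is_fresh(self, now: datetime) -> bool:
        return now - self.last_updated <= self.max_staleness


class DataProductCatalog:
    """Hypothetical catalog where domains register the products they own."""

    def __init__(self) -> None:
        self._products: dict[str, DataProduct] = {}

    def register(self, product: DataProduct) -> None:
        self._products[product.name] = product

    def usable_for_agent(self, domain: str, now: datetime) -> list[str]:
        # An agent consumes only products that meet their own freshness guarantee.
        return sorted(
            p.name
            for p in self._products.values()
            if p.domain == domain and p.is_fresh(now)
        )
```

The design choice here is that the freshness contract belongs to the owning domain and is not enforced by a central team. That division of responsibility is what the data mesh pattern emphasizes.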

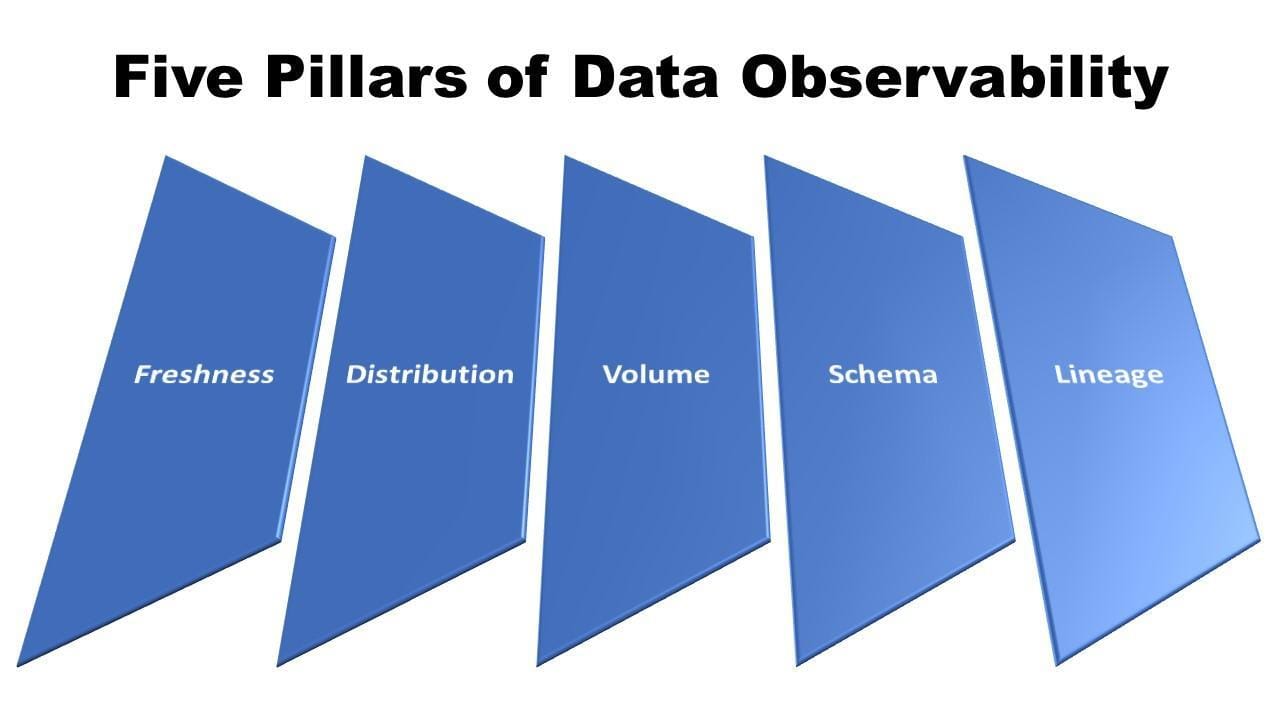

DATA OBSERVABILITY

TL;DR: Ataccama’s global study reveals poor data quality and governance hinder AI adoption and regulatory compliance, urging organizations to improve data frameworks to avoid risks and enhance AI-driven decision-making.

Ataccama's global study highlights critical data quality gaps hindering AI adoption and regulatory compliance efforts.

Many organizations face issues with incomplete and outdated data despite significant investments in AI technology.

Poor data governance complicates compliance with regulations like GDPR and CCPA, increasing organizational risks.

Improving data quality and governance is essential for successful AI projects, compliance, and competitive advantage.

Why this matters: Poor data quality undermines AI effectiveness and regulatory compliance, risking project failures and penalties. Organizations must strengthen data governance to fully leverage AI benefits, meet legal standards, and maintain competitive advantage in a data-driven market. Addressing these gaps is crucial for sustainable digital transformation and risk management.
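The study names two problems, incomplete data and outdated data. Both can be measured before a dataset is handed to an AI pipeline. This is a minimal sketch under several assumptions that do not come from the Ataccama study: a hypothetical customer-record schema, a 90-day freshness threshold, and a 95% usability bar.

```python
from datetime import datetime, timedelta, timezone

REQUIRED_FIELDS = ("customer_id", "email", "country")  # hypothetical schema
MAX_AGE = timedelta(days=90)                            # hypothetical freshness threshold


def quality_report(records: list[dict], now: datetime) -> dict[str, int]:
    """Count incomplete, outdated, and fully usable records."""
    incomplete = outdated = usable = 0
    for r in records:
        # Incomplete: any required field missing or empty.
        is_incomplete = any(r.get(f) in (None, "") for f in REQUIRED_FIELDS)
        # Outdated: no timestamp, or last update older than the threshold.
        ts = r.get("updated_at")
        is_outdated = ts is None or now - ts > MAX_AGE
        incomplete += is_incomplete
        outdated += is_outdated
        usable += not (is_incomplete or is_outdated)
    return {"total": len(records), "incomplete": incomplete,
            "outdated": outdated, "usable": usable}


def ready_for_ai(report: dict[str, int], min_usable_ratio: float = 0.95) -> bool:
    # Hypothetical gate: block the pipeline unless enough records are usable.
    return report["total"] > 0 and report["usable"] / report["total"] >= min_usable_ratio
```

A single record can be both incomplete and outdated, so `usable` is counted directly. It is not derived by subtracting the other two counts.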

GRAPH DATABASE

TL;DR: The AI boom is driving rapid growth in graph databases, which excel at handling complex relationships, boosting applications in healthcare, finance, and AI innovation while attracting significant market investment.

Graph databases are booming due to AI's need for managing complex, interconnected data effectively.

They store data as nodes and relationships, closely mimicking real-world connections for AI applications.

Industries like healthcare and finance use graph databases for enhanced recommendations, fraud detection, and semantic search.

Rising adoption is driving AI innovation and better decision-making, and is attracting strong investment in the graph database market.

Why this matters: Graph databases enable AI to process complex relationships more naturally, enhancing industries like healthcare and finance with better insights and fraud detection. Their growth fosters AI innovation, improves decisions, and attracts investment, marking a vital shift towards more advanced, relationship-focused data management.
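The node-and-relationship model, and the fraud-detection use case mentioned above, can be illustrated without a graph database at all. This is a minimal sketch in plain Python with a hypothetical schema of Account, Device, and Card nodes. Accounts that share a device or card with a flagged account sit only a couple of hops away from it. In a graph that is a short traversal, while in a relational model it becomes a chain of self-joins.

```python
from collections import defaultdict, deque


class PropertyGraph:
    """Tiny in-memory graph: labeled nodes plus typed relationships."""

    def __init__(self) -> None:
        self.labels: dict[str, str] = {}
        self.adj: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add_node(self, node_id: str, label: str) -> None:
        self.labels[node_id] = label

    def relate(self, a: str, rel_type: str, b: str) -> None:
        # Store the relationship in both directions so traversal can follow either way.
        self.adj[a].append((rel_type, b))
        self.adj[b].append((rel_type, a))

    def within_hops(self, start: str, max_hops: int) -> dict[str, int]:
        """Breadth-first search: nodes reachable in at most max_hops, with distances."""
        dist = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            if dist[node] == max_hops:
                continue
            for _rel, nbr in self.adj[node]:
                if nbr not in dist:
                    dist[nbr] = dist[node] + 1
                    queue.append(nbr)
        return dist


def accounts_linked_to(graph: PropertyGraph, flagged: str, max_hops: int = 2) -> list[str]:
    # Hypothetical fraud-ring heuristic: other accounts near a flagged account.
    reach = graph.within_hops(flagged, max_hops)
    return sorted(n for n, d in reach.items()
                  if d > 0 and graph.labels.get(n) == "Account")
```

Suppose Mallory and Bob use the same device. Then `accounts_linked_to(g, "acct:mallory")` surfaces Bob at two hops. Raising `max_hops` widens the search to accounts that share a card with Bob.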

EVERYTHING ELSE IN CLOUD DATABASES

AI-powered Cortex AI SQL transforms data querying

SQL Server 2025 Release Candidate 0 Announced

MySQL 8.0 Ends in 2026—Upgrade Now!

Cosmos DB boosts Microsoft Fabric's data power

EDB Reveals Lakehouse Future, AI Strategies at Summit

MariaDB acquires SkySQL to boost AI, serverless tech

Low uptake: Why Microsoft Purview lags behind

Domo Boosts BigQuery Integration with New Features

Snowflake’s Snowpark Connect increases cloud data power

DEEP DIVE

My Review of the GCP Data Engineer Exam/Certification

Don’t expect any NDA breaches here.

This exam was one of the first in the second batch of certifications that I have been piling up since the fall of 2021. I felt the winds of change both inside my former employer and outside it, with the onslaught of new database technologies I saw coming at that time.

The first time I sat for the GCP DE exam was in June of 2022. By then, taking certification exams at home had become a thing. That was unthinkable back in 2002, when I completed my Oracle 8i OCP DBA designation. You can learn about my ordeal with that here.

Now on to last year, when my certification was about to lapse. I honestly did not prepare for that sitting, back in June of 2024. Let’s just say it did not go well.

As for this summer, I did indeed prepare, intermittently, by doing 3 passes through the de facto exam guide. More on the exam guide in a minute. I had some pretty good notes lying around in one of my many old-fashioned notebooks. No Notion for me.

Now, the night before an exam I usually lock myself in my office and review back and forth, in absolute silence. Not this time. I never do this, but I had some of the Pacific Northwest’s finest blasting in my headphones while reading my notes. I think I studied for a grand total of 57 minutes, interspersed with the warbling of Dave Grohl (yes, I know he’s from Virginia, but you know what I mean) and Ben Gibbard.

Now, the current iteration of the exam is very different from the one I took 3 years ago. There is very little machine learning content now. There is a pronounced emphasis on BigQuery and all of its accoutrements, and a lot of questions about Dataplex, Dataform, and Data Fusion.

Here are some of the other subjects that I saw covered on the exam:

IAM

BigQuery

Spanner

Spark

Dataproc

Dataflow

Kafka

Apache Beam

Pub/Sub

Hadoop

BQ Omni

Bigtable

Data Mesh

DLP

Materialized Views

DAGs

Firestore

Memorystore

Dataplex

Dataform

Data Fusion

There was a bunch of other stuff, but these topics come from notes I jotted down about an hour after I wrote the exam.

If you are going to attempt this exam, all I can really say is this: pay close attention to the official exam guide, and know what each GCP data service is best used for.

If you study, and keep those things in mind, you should have a good chance of passing the exam.

One more thing. I found the official exam guide that I bought in 2021 to be outdated. There may be more current ones available.

Also, I purchased a couple of practice exams from Udemy, and I did not find them incredibly helpful, so I am not going to link to them.

Gladstone Benjamin